Something is wrong with the current wave of AI chatbots. Why is everyone building a wrapper on top of ChatGPT? Together with our partners at Datag Inc., we built our first production-ready LLM app: Avva. Avva is an AI veterinary platform built by chaining together multiple LLMs. It is not just a Q&A platform but an intelligent companion: it compiles comprehensive medical histories, enables personalized care advice, and integrates with pet-related documents and health records, all to provide a more holistic view of your pet's well-being. We also want to provide more value to our users by integrating recommendations for trusted products, services, and local pet clinics.

Exploring the Potential of LLMs

Our project ventured into uncharted territory, exploring the application of Large Language Models (LLMs) in pet care. We recognized the vast potential of LLMs, but also acknowledged the unknowns surrounding optimal user interface designs and user behavior. To navigate these uncertainties, we adopted a multifaceted approach. This involved fine-tuning ChatGPT, experimenting with open-source models, and rethinking interaction flows. We embraced the experimental nature of this new domain by leveraging custom LLMs and supportive agents.

Overcoming the Hurdle of Memory and Context

A central challenge we faced was the development of custom memory and context handling mechanisms. These are crucial for maintaining a continuous and meaningful conversation with users, especially when building a comprehensive medical history that now incorporates vital pet-related documents and health records. We cover our solutions for memory and context handling, and the strategies we used to overcome these challenges, in later sections of this post.

Aligning the AI with Our Vision

For Avva to fulfill its role effectively, we established clear expectations for its behavior. We aimed to empower it to confidently provide users with practical information, minimizing unnecessary referrals to veterinarians. Concise and direct responses were also a priority, alongside the ability to ask relevant questions to gather crucial details.

Additionally, we sought to imbue Avva with a touch of playfulness, reflecting its identity as a smart raccoon, without compromising its professionalism. Achieving this desired alignment proved to be a multifaceted endeavor. It involved utilizing a combination of various LLMs, prompting techniques, fine-tuning processes, and the development of custom mechanisms to handle memory and extended contexts. Simply relying on "good" pre-existing models wasn't enough; we needed to actively shape Avva's behavior to reach a satisfactory level of alignment with our vision.

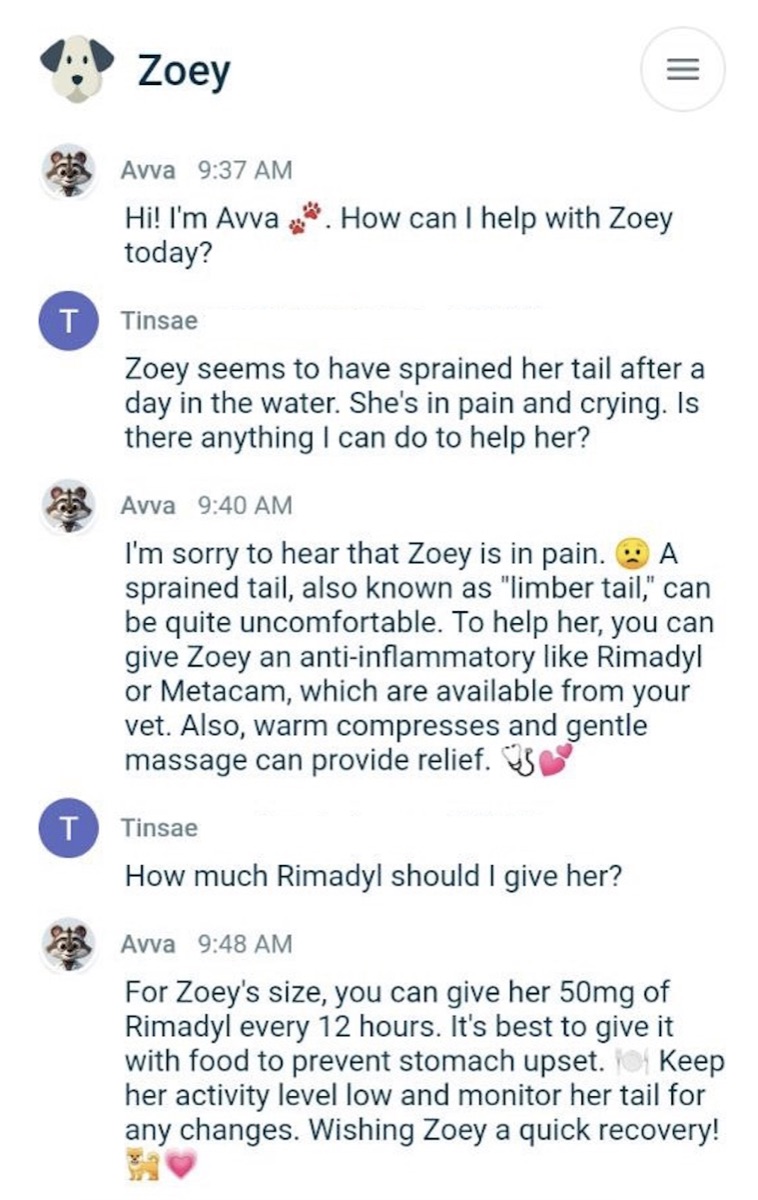

Below is an example short conversation with recent version of Avva. Please note that the bot has basic context already (how much Zoey weights, and medical history from past conversations). It also gives short and actionable advice while keeping a friendly tone:

Addressing the Constraints of Existing Models

While publicly available large language models (LLMs) like ChatGPT and Claude have revolutionized many fields, they inherently possess limitations, particularly in areas demanding in-depth expertise, such as medical advice.

Many users have likely encountered frustrating disclaimers like "For details, ask your lawyer" or "I can't give medical advice," which, although responsible, can significantly hinder applications requiring specific guidance.

Recognizing this challenge, we actively explored strategies that transcended simply prompting these models. Our efforts encompassed fine-tuning ChatGPT and experimenting with various open-source models to navigate these constraints. Through a process of trial and error, we were able to distinguish between "nerfed" conversations, characterized by a lack of depth and specificity, and "good" interactions that yielded valuable insights.

Choosing the Right UX: Single Stream vs. Multiple Interactions

While designing the UX, we had a decision to make. Would users engage in a single, continuous conversation per pet, or would multiple, separate conversations be the norm? This seemingly simple choice held significant implications, influencing every aspect of our application, from the user interface and experience to the underlying technology and model integration.

Multiple Conversations: Focused Interactions but Limited Insights

- Pros: Each conversation stays focused on a specific topic, ensuring clarity and relevance. Implementation is also simpler, since no complex memory management mechanisms are needed.

- Cons: Users need to repeat information in each new session because the system has no memory of past interactions, which limits its ability to infer deeper context and offer personalized advice.

Single Continuous Chat: Personalized Experience with Technical Hurdles

- Pros: Enables the system to draw insights from previous conversations, resulting in a more personalized and coherent user experience. This fosters deeper understanding and allows for tailored advice.

- Cons: The main challenge lies in mitigating the potential for irrelevant or misleading information ("information noise") to influence future interactions. However, intelligent summarization and memory management techniques can filter and retain only relevant domain-specific information.

Ultimately, our decision to pursue a single continuous chat per pet was driven by the desire to deliver a more personalized, efficient, and innovative user experience. While acknowledging the technical challenges, we firmly believe this approach significantly enhances the platform's ability to provide tailored advice and support to pet parents.

Maintaining Context in Continuous Conversations

Opting for a single, continuous conversation per pet necessitated innovative approaches to manage memory and context effectively. We sought to strike a delicate balance: retaining crucial information for personalized interactions while avoiding unnecessary clutter that could bloat the conversation history.

While the user experiences a seamless, continuous dialogue, our system employs strategic techniques behind the scenes. In a new conversation, the user interacts with the virtual vet, receiving guidance and answers to their queries. When the user has been inactive for more than 20 minutes or ends the session, a process is triggered to collect relevant health data from the conversation. This data covers essential aspects like vaccination status, reproductive status, diet, allergies, and any pre-existing medical conditions.

Furthermore, we established a "consultancy case list" to chronologically store simplified summaries of the pet owner's concerns and the corresponding recommendations provided by the virtual vet. This time-stamped record empowers the AI to handle time-sensitive cases more effectively. Each "consultancy case" comprises two key elements:

- Concern: A concise one-sentence summary of the pet owner's primary concern.

- Recommendation: A concise one-sentence summary of the virtual vet's recommendation.

When a user resumes the conversation after a hiatus, the system retrieves and presents the crucial information, ensuring the "Health Background" is readily available. This empowers us to initiate the conversation with a relevant prompt, such as: "You mentioned a day ago that your dog wasn't eating well. Has there been any change in their appetite?"
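The memory scheme described above can be sketched in a few lines of Python. This is a minimal illustration rather than Avva's actual code: the class names `ConsultancyCase` and `PetMemory`, the `build_context` helper, and the `max_cases` limit are all assumptions made for the example. Only the 20-minute inactivity threshold and the concern/recommendation pair come from the description above.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

INACTIVITY_LIMIT = timedelta(minutes=20)  # threshold mentioned in the post


@dataclass
class ConsultancyCase:
    """One time-stamped entry in the chronological case list."""
    timestamp: datetime
    concern: str          # one-sentence summary of the owner's concern
    recommendation: str   # one-sentence summary of the vet's advice


@dataclass
class PetMemory:
    """Hypothetical container for what is kept between sessions."""
    name: str
    health_background: dict[str, str] = field(default_factory=dict)
    cases: list[ConsultancyCase] = field(default_factory=list)


def session_expired(last_message_at: datetime, now: datetime) -> bool:
    """True once the user has been inactive for more than 20 minutes."""
    return now - last_message_at > INACTIVITY_LIMIT


def build_context(memory: PetMemory, now: datetime, max_cases: int = 5) -> str:
    """Render stored memory as text to prepend to a resumed chat."""
    lines = [f"Health background for {memory.name}:"]
    for key, value in sorted(memory.health_background.items()):
        lines.append(f"- {key}: {value}")
    lines.append("Recent consultancy cases:")
    # Only the most recent cases are included, to keep the prompt small.
    for case in memory.cases[-max_cases:]:
        days_ago = (now - case.timestamp).days
        lines.append(
            f"- {days_ago} day(s) ago | concern: {case.concern} "
            f"| recommendation: {case.recommendation}"
        )
    return "\n".join(lines)
```

Keeping the timestamp on each case is what would let the model phrase a follow-up such as "a day ago", and capping the number of cases is one simple way to stop the history from bloating the prompt.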

Leveraging Specialized Models for Optimal Performance

One of the key insights we gained from developing Avva was the power of utilizing different models for distinct tasks within the application. This approach is particularly crucial when navigating the complex interplay between cost, speed, and accuracy. Here's a breakdown of our strategic model selection:

- Fine-tuned LLM as the General Vet-Chatbot: This serves as the primary interface for user interaction, handling general inquiries and routing complex questions to specialized models. We fine-tune the LLM to ensure a comprehensive understanding of pet-related topics and facilitate natural language interactions. This fine-tuning process also allows us to empower the model to be more helpful and informative, minimizing unnecessary referrals to veterinarians for basic inquiries.

- Fine-tuned GPT-3.5 LLM for Historical and Medical Context Integration: This specialized model focuses on leveraging the pet's unique medical history and past interactions to provide tailored suggestions and insights.

- GPT-3.5 for Summarization and Medical Record Building: This model plays a crucial role in efficiently condensing past conversations and relevant data into concise summaries. These summaries populate the pet's medical records, providing a readily accessible overview of the pet's health history.

By strategically selecting and fine-tuning different models for specific tasks, we achieve a balance between accuracy, efficiency, and affordability. This multi-model approach empowers Avva to deliver a comprehensive and personalized experience for both pet parents and their furry companions.
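One way to picture this split is a small routing table that maps each task to a model. The sketch below is an assumption-laden illustration: the `Task` names, the placeholder model identifiers, and the `run_task` helper are hypothetical, and the actual model call is injected as a plain function so that no particular client library is implied.

```python
from enum import Enum
from typing import Callable


class Task(Enum):
    CHAT = "chat"            # general vet-chatbot
    CONTEXT = "context"      # historical and medical context integration
    SUMMARIZE = "summarize"  # summarization and medical record building


# Placeholder identifiers; the real fine-tuned model IDs are not given in the post.
MODEL_FOR_TASK = {
    Task.CHAT: "vet-chat-finetuned",
    Task.CONTEXT: "gpt-3.5-context-finetuned",
    Task.SUMMARIZE: "gpt-3.5-turbo",
}

# A completion function takes (model, prompt) and returns the model's text.
CompleteFn = Callable[[str, str], str]


def run_task(task: Task, prompt: str, complete: CompleteFn) -> str:
    """Send the prompt to whichever model is configured for this task."""
    return complete(MODEL_FOR_TASK[task], prompt)
```

Keeping the mapping in one place makes it cheap to swap a model for a single task, for example when a smaller model turns out to be good enough for summarization.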

Fine Tuning and Data Preparation

Chat Dataset for Fine Tuning

Developing a high-quality dataset for fine-tuning the main vet-bot LLM proved to be a crucial and challenging step. We addressed this by:

- Leveraging Real-World Vet Interactions: We built a proprietary dataset on real interactions between veterinarians and pet owners, ensuring its authenticity and practical relevance.

- Expert Review by Veterinary Professionals: The dataset was meticulously reviewed by qualified veterinary doctors who provided valuable insights and ensured the information's accuracy.

- Injecting Personality and Playfulness: We incorporated elements like emojis and a friendly tone into the dialogues to imbue the bot with a playful and engaging personality.

- Maintaining Cleanliness and Accuracy: Maintaining a dataset free from typos and grammatical errors was paramount, as such errors can hinder the model's learning and effectiveness.
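Part of the cleanliness check can be automated before any expert review. The sketch below assumes the dataset is stored as JSONL in the chat format used by OpenAI's fine-tuning API (one `{"messages": [...]}` object per line); the post does not show the actual file layout, and the `validate_example` helper and its specific checks are illustrative only.

```python
import json

ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_example(line: str) -> list[str]:
    """Return a list of problems found in one JSONL training line."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    messages = record.get("messages") if isinstance(record, dict) else None
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    for i, msg in enumerate(messages):
        if not isinstance(msg, dict):
            problems.append(f"message {i}: not an object")
            continue
        if msg.get("role") not in ALLOWED_ROLES:
            problems.append(f"message {i}: unexpected role {msg.get('role')!r}")
        content = msg.get("content")
        if not isinstance(content, str) or not content.strip():
            problems.append(f"message {i}: empty content")
    # Each training dialogue should end with the reply the model is meant to learn.
    if isinstance(messages[-1], dict) and messages[-1].get("role") != "assistant":
        problems.append("last message should come from the assistant")
    return problems
```

A structural check like this catches malformed records only; typos, tone, and medical accuracy still need human reviewers.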

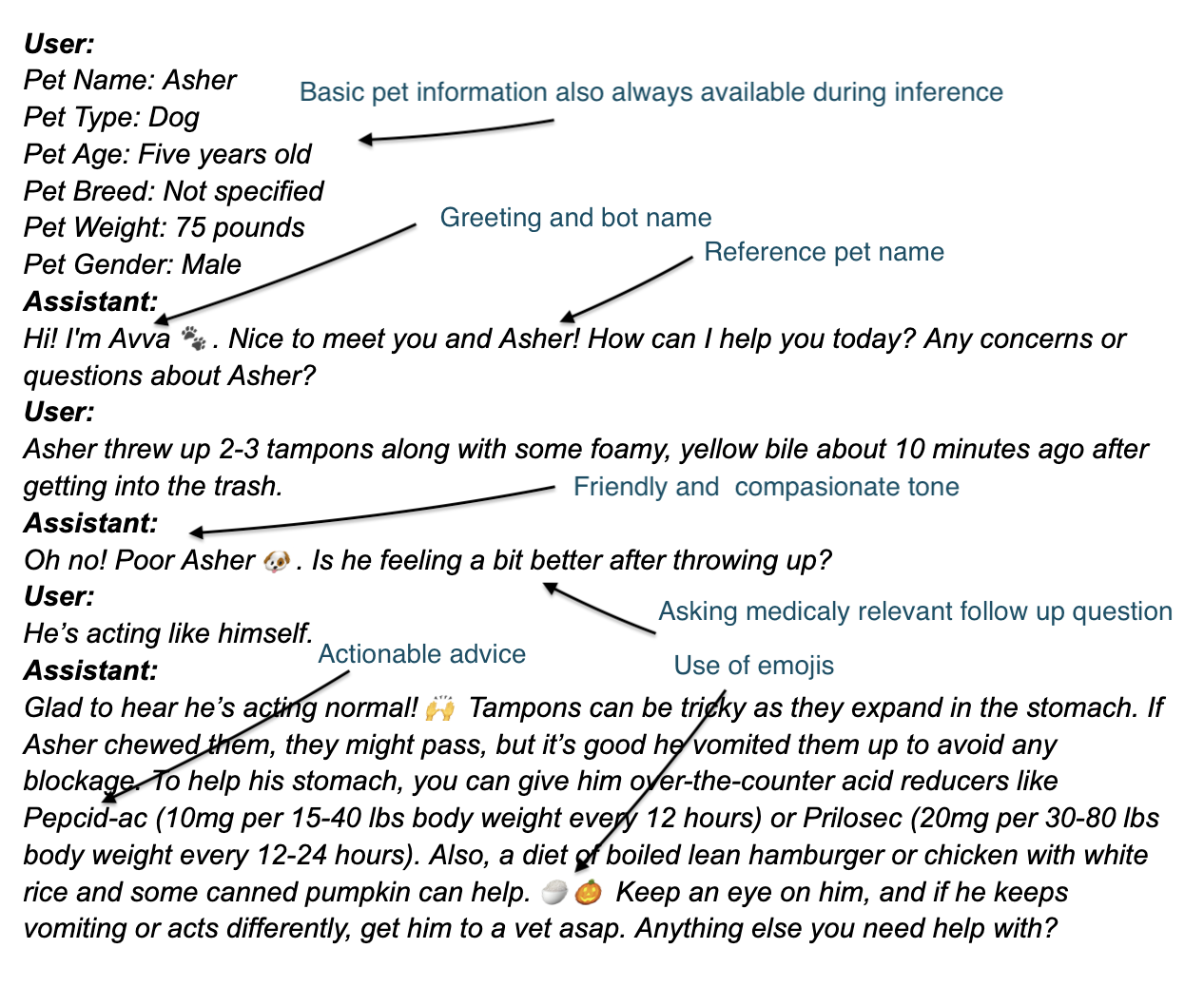

Here is a sample out of the dataset we used for fine-tuning the main LLM powering the app together with annotations of key characteristics we incorporated into it, in order to achieve specific bot behavior:

Question Suggestions Fine Tuning

Generating relevant and helpful question suggestions presents a unique challenge in the user interface. We identified several key factors:

- Accessibility: Presenting suggestions directly after the chatbot's response fosters user engagement and understanding of the available options.

- Speed and Conciseness: Ideally, these suggestions should appear promptly and be concise, typically containing fewer than five words.

- Contextual Relevance: The suggestions must be aligned with the ongoing conversation and the chatbot's previous response.

- Structured Output: The suggestions need to be delivered in JSON format for seamless integration with the backend infrastructure.

To address this, we employed a fine-tuning approach:

- Leveraging GPT-4 Performance: We initially investigated using GPT-4 or similar large models, as they demonstrated promising results in generating appropriate suggestions.

- Cost-Effective Fine-Tuning: However, considering the computational cost and response time of GPT-4, we opted for a more efficient strategy.

- Distillation and Fine-Tuning: We created a dataset based on GPT-4 outputs and fine-tuned a smaller, more cost-effective model (GPT-3.5) for this specific task. Distilled models can often achieve performance comparable to their larger counterparts while requiring significantly fewer computational resources and offering faster response times.

Here is an example of how that works in practice. Let's say the last bot message was as follows:

Assistant:

Insect bites can be uncomfortable for dogs. 🐜 To help Fluffy, you can:

1. Apply a cold compress to reduce swelling.

2. Use a gentle, pet-safe antiseptic to clean the bites.

3. Consider an over-the-counter antihistamine like Benadryl (1mg per pound of body weight every 8 hours) to relieve itching.

4. If the bites are severe or causing a reaction, consult your vet. Keep Fluffy comfortable and monitor the bites for any signs of infection. 🩹

The generated suggestions would be as follows:

- What are signs of infection?

- How to apply a cold compress?

- What antiseptic is pet-safe?

Relevant question suggestions can significantly improve the user experience, show users what the interface can do, or simply save them time.
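Because the suggestions arrive as model-generated JSON, a backend would typically validate them before showing them to the user. The following is a minimal sketch under stated assumptions: the `{"suggestions": [...]}` payload shape, the `parse_suggestions` helper, and both limits are hypothetical, since the post only says that the output is JSON and that suggestions should be short.

```python
import json

MAX_WORDS = 6        # assumed cap; the post only says suggestions are short
MAX_SUGGESTIONS = 3  # matches the three suggestions in the example above


def parse_suggestions(raw: str) -> list[str]:
    """Parse model output assumed to look like {"suggestions": ["...", ...]}."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError:
        return []  # malformed output: show no suggestions rather than fail
    items = payload.get("suggestions", []) if isinstance(payload, dict) else []
    if not isinstance(items, list):
        return []
    cleaned = []
    for item in items:
        if not isinstance(item, str):
            continue
        text = " ".join(item.split())  # collapse stray whitespace
        # Keep only short, non-empty, non-duplicate suggestions.
        if text and len(text.split()) <= MAX_WORDS and text not in cleaned:
            cleaned.append(text)
    return cleaned[:MAX_SUGGESTIONS]
```

Returning an empty list on malformed output means the chat keeps working even when the suggestion model misbehaves; the user simply sees no suggestion chips for that turn.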

Open Source vs. Closed Source LLMs in Pet Care Applications

The landscape of large language models (LLMs) has undoubtedly undergone significant expansion in recent months, with various closed and open-source alternatives emerging alongside OpenAI's offerings. While open-source models offer undeniable advantages in terms of transparency and customizability, the question of their suitability for specific applications, particularly in the sensitive domain of pet care, remains a crucial consideration.

Challenges of Open Source LLMs in Medical Advice

While the notion of open-source models offering more freedom might seem intuitive, our experience revealed otherwise. When asked for medical advice, many open-source models, including those that excel at other tasks, were even more reluctant to answer than OpenAI models. This highlights the importance of careful evaluation and of data-driven adjustments to overcome this limitation.

OpenChat's Promising Potential

Among the open-source models explored, OpenChat stood out, demonstrating performance comparable to GPT-3.5-turbo after fine-tuning. This finding underscores the potential of open-source LLMs in specific scenarios, especially when considering factors like convenience and accessibility.

Looking Beyond the Surface

It's important to acknowledge that this exploration represents only a starting point. Further investigation, potentially involving alternative data designs and more extensive experimentation, could unlock other open-source models and make them more helpful in providing medical advice.

Key Learnings

- Multi-Model Architecture: Using specialized LLMs for different tasks (chat, summarization, context) proved more effective than a single general model.

- Fine-Tuning is Essential: Pre-trained models alone weren't enough; fine-tuning with domain-specific data was critical for achieving the desired behavior.

- Memory Management: Custom context handling mechanisms are crucial for maintaining meaningful, continuous conversations.

- Data Quality Matters: Clean, expert-reviewed datasets significantly impact model performance and reliability.

- Model Distillation Works: Smaller fine-tuned models can match larger models' performance at lower cost and latency.

Conclusion: Exploration and Innovation

Developing Avva, the LLM-powered pet parent assistant, has been a transformative journey marked by continuous learning and adaptation. From navigating the limitations of current models to shaping a user-friendly experience with continuous conversations, each obstacle has fueled innovation and inspired novel solutions.

As we move forward, we remain dedicated to continuously refining Avva based on valuable user feedback and the ever-evolving LLM landscape. We firmly believe that Avva holds the potential to revolutionize pet care, empowering pet parents worldwide with personalized guidance and support. We are optimistic about the future of Avva and its potential contribution to creating a more informed, convenient, and accessible pet care experience for everyone.

Building LLM applications? We'd love to hear about your challenges. Get in touch to discuss your project.